NVMCached: An NVM-based Key-Value Cache

Xingbo Wu, Fan Ni, Li Zhang, Yandong Wang, Yufei Ren, Michel Hack, Zili Shao, and Song Jiang, in Proceedings of the 7th ACM SIGOPS Asia-Pacific Workshop on Systems, 2016.

This is a short (6 page) paper that discusses the challenges of taking a memory based key-value cache (e.g., memcached) and converting it to use a non-volatile memory. Because the authors do not have NVM available, they focus on simulating it using DRAM. They simulate behaviors by using appropriate cache flushes and fences. In fact, they use CLFLUSH and MFENCE, which may be a bit more than is required.

Here is an interesting point for anyone who learned Intel processor architecture more than about 15 years ago: the CPUs no longer provide strong ordering guarantees. If you’ve learned or refreshed your knowledge about memory consistency models more recently, then you are likely already familiar with this. One of the interesting issues with NVM is that we need to think about memory consistency in a way that’s never been required previously. The authors of this paper do this.

They note that flushes are expensive and argue for using “consistency-friendly data structures” to minimize the number of flushes required, which certainly makes sense. Some operations (like anything with the LOCK prefix) are performed atomically as well and are conceptually similar; both tie into the processor’s cache architecture (for those that have been around a while, the cache line semantics for LOCK were introduced around 1995).

One of the important observations in this paper is that the reason to use NVM is that it allows fast restart after a system failure, such as a crash or power event. For memcached, there are users that are asking for persistence. Even if the service is only a cache, it turns out that offloading to the cache can be a powerful mechanism for improving overall throughput, but the larger the cache becomes, the higher the cost when the cache gets tossed after a system crash.

However, this also simplifies the problem at hand: discarding data in this type of system is of minimal concern. They utilize checksums to detect damaged data structures, which alleviates their need to perform ordered write operations; they reorganize their data structures to permit changes with only infrequent fencing. They point out the problem of zombie data (data that is deleted, then the system crashes, so the data returns after the system reboots). To prevent this situation they choose to split their data structures.
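
The checksum idea can be illustrated with a minimal sketch. This is not the paper's record layout: the header format and the names `pack_record` and `unpack_record` are assumptions made for illustration. The point is only that a reader can detect a torn or damaged record after a crash and treat it as a cache miss, rather than relying on ordered writes.

```python
import struct
import zlib

# Hypothetical record layout: 4-byte payload length, 4-byte CRC32, then payload.
HEADER = struct.Struct("<II")


def pack_record(payload: bytes) -> bytes:
    """Build a record whose header carries a checksum of the payload."""
    return HEADER.pack(len(payload), zlib.crc32(payload)) + payload


def unpack_record(buf: bytes):
    """Return the payload, or None if the record is torn or corrupt.

    For a cache, returning None is safe: the item is simply treated as missing.
    """
    if len(buf) < HEADER.size:
        return None
    length, crc = HEADER.unpack_from(buf)
    payload = buf[HEADER.size:HEADER.size + length]
    if len(payload) != length or zlib.crc32(payload) != crc:
        return None
    return payload
```

A record that was only partially written before a crash fails the length or checksum test and is discarded, which is acceptable here precisely because the store is a cache.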

The checksums prove to be much less expensive than a flush; the authors claim up to 20x faster (my own reading indicates that, using the optimized CRC32 built into modern Intel CPUs, the cost is just over 1 clock cycle per byte checksummed, so a 64 byte cache line would have a cost of approximately 70 clock cycles; on a 2.0GHz processor, that would be roughly 35 nanoseconds, which certainly is somewhere between 5 and 20 times faster than writing back a cache line).

The authors also touch on the challenges of memory allocation. Memory fragmentation is something we often fix in dynamic memory by rebooting the machine. However, now we have the storage level problem with fragmentation; fixing this involves write amplification. They organize their allocation strategy by observing there is considerable locality across the KV store. They combine a log structured allocation scheme with a “zone based” allocation model and attempt to combine cleaning, eviction, and updates to minimize the impact on performance. They also use DRAM to track access information as part of their LRU implementation. This creates a burden on crash recovery, but benefits from significantly improved runtime performance.

They also implement a DRAM write combining cache; the idea is that when an item is detected to be “hot” the item is cached in DRAM. Those hot items will thus be discarded if the system crashes.

Their evaluation is against a modified version of memcached. They utilize four Facebook traces; interestingly they do not use one (VAR) because “it is write-dominant with only a few distinct keys touched.” I am not certain that I see why this makes it unsuitable for use, though I suspect they do not obtain favorable behavior in the face of it.

They evaluate the performance of native memcached versus their NVM-enhanced version with a series of micro-benchmarks after a restart event. They show that in several cases it takes more than 1 billion requests before the performance of the DRAM memcached converges with the NVM-enhanced version. When they use the “real world” Facebook memcached traces, they observe that three of the benchmarks show comparable performance. Two of them, SYS and VAR, show substantially better performance. Both benchmarks are write intensive. I admit, this surprised me, since I am not certain why writing to (simulated) NVM should be substantially faster than writing to DRAM.

Overall, it is interesting work and touches on important challenges for working with NVM as well as improving system performance.

MRAMFS: A compressing file system for non-volatile RAM

Nathan K. Edel, Deepa Tuteja, Ethan L. Miller, and Scott A. Brandt in Proceedings of the 12th IEEE/ACM International Symposium on Modeling, Analysis, and Simulation of Computer and Telecommunication Systems (MASCOTS 2004), Volendam, Netherlands, October 2004.

This paper allows me to provide both a file systems paper and look at an interesting approach to byte-addressable non-volatile memory (NVM).

We have developed a prototype in-memory file system which utilizes data compression on inodes, and which has preliminary support for compression of file blocks. Our file system, mramfs, is also based on data structures tuned for storage efficiency in non-volatile memory.

One of the interesting aspects of NVM is that it has characteristics of storage (persistence) and memory (byte-addressability). Storage people are used to having vast amounts of time to do things: it is quite difficult, though not impossible, for any computation performed on the data to be a significant cost once it is combined with the I/O latency of disk drives. In-memory algorithms worry about optimal cache line usage and efficient usage of the processor, but they don’t need to worry about what happens when the power goes off.

Bringing these two things together requires re-thinking things. NVM isn’t as fast as DRAM. Storage people aren’t used to worrying about CPU cache effects on data resilience.

So mramfs looks at this from a very file systems centric perspective: how do we exploit this nifty new memory to build a new kind of RAM disk? It’s still RAM, but now it’s persistent. NVRAM is slower than DRAM and hence it makes sense to compress data to increase the effective data transfer rate (though it is not clear if that really will be the case).

I didn’t find a strong motivation for compression, though I can see the viability of it now, in a world in which we want to pack as much as we can into a 64 byte cache line. The authors point out that one of the previous systems (Conquest) settled on a 53 byte inode size. The authors studied existing systems and found they could actually compress down to 20 bytes (or less) for a single inode. They achieved this using a combination of gamma compression and compressing common file patterns (mode, uid, and gid). Another reason for this approach is they did not wish to burden their file system with a computationally expensive compression scheme.
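
To give a feel for why small inode fields compress well, here is a minimal sketch that assumes “gamma compression” refers to Elias gamma coding; the function names are hypothetical and the bit strings are kept as text for readability rather than packed into bytes. A positive integer whose binary form has k bits is written as k - 1 zeros followed by those k bits, so small values (link counts, small sizes) take only a few bits.

```python
def gamma_encode(n: int) -> str:
    """Elias gamma code of a positive integer, as a string of '0'/'1'."""
    if n < 1:
        raise ValueError("Elias gamma coding is defined for integers >= 1")
    binary = bin(n)[2:]                      # binary digits without the '0b' prefix
    return "0" * (len(binary) - 1) + binary  # unary length prefix, then the value


def gamma_decode(bits: str) -> list:
    """Decode a concatenation of gamma codes (assumes well-formed input)."""
    values = []
    i = 0
    while i < len(bits):
        zeros = 0
        while bits[i] == "0":                # count the unary length prefix
            zeros += 1
            i += 1
        values.append(int(bits[i:i + zeros + 1], 2))
        i += zeros + 1
    return values
```

Because the code is self-delimiting, several fields can be concatenated without separators, which is what makes variable-length inodes possible.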

In Figure 1 (from the paper) the authors provide a graphic description of their data structures. This depicts a fairly traditional UNIX style file system, with an inode table, name space (directories), references from directory entries to the inodes. Inodes then point to control structures that eventually map to the actual data blocks.

The actual memory is managed by the file system from a single chunk of non-volatile memory; the memory is virtually addressed and the paper points out that they don’t actually care how that mapping is achieved.

Multiple inodes are allocated together in inode blocks with each block consisting of 16 (variable length) inodes. The minimum size of a block is 256 bytes. Inodes are rewritten in place whenever possible, which can lead to slack space. If an inode doesn’t fit within its existing space, the entire block is reconstructed and then written to a new block. Afterwards, the block pointer is changed to point to the new block. Then the old block is freed.
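
The update rule described above can be sketched roughly as follows. This is an illustration under assumed names (`InodeBlock`, `InodeTable`, a fixed `slack` per slot), not the mramfs implementation; it only shows the two paths: rewrite in place when the new encoding fits, otherwise rebuild the block and switch the block pointer.

```python
class InodeBlock:
    """A group of variable-length inodes; each slot is [capacity, data]."""

    def __init__(self, inodes, slack=4):
        # Hypothetical policy: reserve a little slack so small growth fits in place.
        self.slots = [[len(data) + slack, data] for data in inodes]


class InodeTable:
    """Maps a block number to the current block (the 'block pointer')."""

    def __init__(self):
        self.blocks = {}

    def update(self, block_no, index, data):
        """Rewrite one inode; return True if the block had to be rebuilt."""
        block = self.blocks[block_no]
        capacity = block.slots[index][0]
        if len(data) <= capacity:
            block.slots[index][1] = data      # fits: rewrite in place
            return False
        inodes = [d for _, d in block.slots]  # does not fit: rebuild the block
        inodes[index] = data
        new_block = InodeBlock(inodes)
        self.blocks[block_no] = new_block     # switch the block pointer last
        return True                           # old block is now unreferenced
```

Switching the pointer only after the new block is complete means a reader sees either the old block or the new one, never a half-built block.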

One thing that is missing from this is much reasoning about crash consistency, which surprised me.

The authors have an extensive evaluation section, comparing to ext2fs, ramfs, and jffs2 (all over RAM disk). Their test was a create/unlink micro-benchmark, thus optimizing the meta-data insertion/deletion case. They then questioned their entire testing mechanism by pointing out that the time was also comparable to what they achieved using tmpfs building the openssl package from source. Their final evaluation was done without the compression code enabled (“[U]nfortunately, the data compression code is not yet reliable enough to complete significant runs of Postmark or of large builds…”). They said they were getting about 20-25% of the speed without compression.

Despite this finding, their conclusion was “We have shown that both metadata and file data blocks are highly compressible with little increase in code complexity. By using tuned compression techniques, we can save more than 60% of the inode space required by previous NVRAM file systems, and with little impact on performance.”

My take-away? This was an early implementation of a file system on NVM. It demonstrates one of the risks of thinking too much in file systems terms. We’ll definitely have to do better.

NV-Heaps: Making Persistent Objects Fast and Safe with Next-Generation, Non-Volatile Memories

Joel Coburn, Adrian M. Caulfield, Ameen Akel, Laura M. Grupp, Rajesh K. Gupta, Ranjit Jhala, Steven Swanson

in ASPLOS XVI Proceedings of the sixteenth international conference on Architectural support for programming languages and operating systems, Pages 105-118, March 5-11, 2011.

This paper was presented at the same conference as Mnemosyne. The authors explore a different use of Non-Volatile Memory (NVM): using it for storing persistent objects. The authors sum up the motivation for this:

Creating these data structures requires a system that is lightweight enough to expose the performance of the underlying memories but also ensures safety in the presence of application and system failures by avoiding familiar bugs such as dangling pointers, multiple free()s, and locking errors. In addition, the system must prevent new types of hard-to-find pointer safety bugs that only arise with persistent objects. These bugs are especially dangerous since any corruption they cause will be permanent.

Thus, their motivation is to enable the use of these nifty “persistent, user-defined objects” that are not practical when backed by disks (“[T]he slow speed of persistent storage (i.e., disk) has restricted their design and limited their performance.”)

The authors make some important observations that are just as applicable today as they were in 2011. These include the observation that persistent objects in NVM cannot reasonably be treated like disk based objects “… because the gap between memory and storage performance drove many design decisions that shaped them.” Nor can they be treated like volatile memory: “To guarantee consistency and durability, non-volatile structures must meet a host of challenges, many of which do not exist for volatile memories.”

They also observe that NVMs greatly expand the possibility of bug sources from having disparate address spaces. In other words, while you have a single address space, part of it is ephemeral and if you store a reference to the ephemeral part in the persistent part, it will be inconsistent after the current process terminates.

I found their observation about the ability of programmers to reason about this also apropos: “[t]rusting the average programmer to “get it right” in meeting these challenges is both unreasonable…” This is consistent with more than 50 years of experience in systems. Personally, I don’t think this is an indictment of the programmer so much as it is a burden on the system (a perspective the authors appear to endorse as well). To make this viable, we need to make it easy to get it right.

Figure 1 shows the general architecture of NV-Heaps: It is envisioned as a library of useful services layered on top of the operating system provided abstractions. One important observation here is that this model completely avoids the need to interact with the operating system in ordinary program execution. Persistence no longer relies upon utilizing the standard file systems interface.

The authors’ explanation of their goals looks like a veritable “wish list” to me: prevent programmer errors, transactions, referential integrity, performance and scalability, and ease of use. I’m not sure how referential integrity is different than programmer errors, but clearly it is a very important aspect of their persistent system.

Figure 3 shows how they handle one of the complex consistency cases inherent in managing NVM: the need to ensure that operations can be safely restarted. For example, when deleting a large data structure, such as a tree, it must be removed in a way that it can be stopped and restarted (e.g., if the system were to crash, it must then be able to resume removal). To resume after a crash, they use a log of operations and replay it – a classic solution to the problem.
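
A restartable delete of this kind can be sketched with a simple intent log. This is a toy model, not the NV-Heaps mechanism: the heap is a dictionary from node name to child names, the log is a list standing in for durable storage, and `Crash` and `max_frees` exist only to simulate a failure partway through.

```python
import math


class Crash(Exception):
    """Simulated power failure in the middle of an operation."""


def _free_subtree(heap, node, budget):
    if node not in heap:              # already freed before an earlier crash
        return
    for child in heap[node]:          # free children first (post-order)
        _free_subtree(heap, child, budget)
    if budget[0] == 0:
        raise Crash()
    budget[0] -= 1
    del heap[node]


def delete_tree(heap, log, root, max_frees=math.inf):
    """Delete a tree so that the operation can be resumed after a crash."""
    if root not in log:
        log.append(root)              # record intent before freeing anything
    _free_subtree(heap, root, [max_frees])
    log.remove(root)                  # done: retire the log entry


def recover(heap, log):
    """After a restart, finish every deletion still recorded in the log."""
    for root in list(log):
        delete_tree(heap, log, root)
```

Freeing children before their parent keeps the remaining nodes reachable from the root, and freeing an already-freed node is a no-op, so resuming the delete is safe no matter where the crash occurred.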

To make their goal of referential integrity work properly they utilize the programming language constructs to do this. The authors note they achieve this by using 128 bit pointer values (on a 64 bit system).

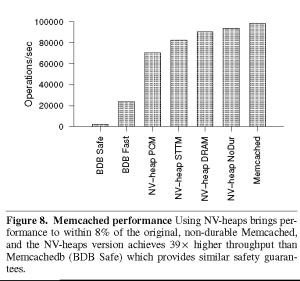

The paper describes their implementation in considerable detail. Again, as we would expect, the implementation yields substantially better performance than comparable systems backed by disks – this really shouldn’t come as a surprise, given the performance differential between disks and non-volatile memory. Even if they had used solid state disks (which existed but were rare in 2011) their results would still have been notably better. Figure 8 shows their performance information, comparing themselves against several other systems. One thing to note: they do not have NVM memory. They use a memory simulator to model the behavior of the system. The performance figures they provide surprised me: they are substantially faster than I would have expected. For PCM, they used a 67 nano-second (ns) read time and 215 ns write time. The paper explains how they obtained these values and how they validated them. For STTM (a different NVM technology) they reported 29 ns read and 95 ns write. As a baseline, their DRAM read time was 25 ns, and write time was 35 ns.

While these numbers were lower than I would have expected, the relative ratio is close to what I expected from other things that I have read: PCM memory is about 2.5 times slower for reads, and 10 times slower for writes. This is consistent with what the paper reports. I guess it’s time to update my mental “Jeff Dean” numbers. And indeed, it turns out that DRAM latency is around 15 ns.

The authors were able to modify memcached to use their library for persistence. They report that they were able to get within 8% of the original memcached. That seems like an excellent outcome.

All we need now are NVMs.

Mnemosyne: Lightweight Persistent Memory

Haris Volos, Andres Jaan Tack, Michael M. Swift, ASPLOS ’11 March 5-11, 2011.

The abstract starts us off in this brave new world:

New storage-class memory (SCM) technologies, such as phase-change memory, STT-RAM, and memristors, promise user-level access to non-volatile storage through regular memory instructions. These memory devices enable fast user-mode access to persistence, allowing regular in-memory data structures to survive system crashes.

So faster, doesn’t require privilege, works like memory, and persistent. Pretty fancy stuff.

File systems aren’t really constructed to have direct access to the disk from user applications. Generally it is done via an I/O interface: open, close, read, and write. But memory isn’t accessed in that fashion at all. So, how does this affect things? What do the services look like? What does it mean to take something everyone thinks of as transient and make it persistent?

Let’s start exploring!

Mnemosyne provides an explicit mechanism for exposing persistent memory to applications. This is done by extending the programming tools so they can declare something should be stored in persistent memory, or so that it can be dynamically allocated with the proviso that it be allocated from this persistent memory.

Thus, the default is that an existing application retains the same behavior – it does not use persistent memory. If an application wishes to use persistent memory it must be modified to do so. Mnemosyne will provide a basic service level, but it won’t change the behavior of existing applications (technical debt really does follow us around in this business).

It’s impressive: “… Mnemosyne can persist data as fast as 3 microseconds.” It makes existing applications modified to use it much faster. Figure 1 (from the paper) describes the architecture the authors created for Mnemosyne.

This architecture envisions the persistent memory being exposed to the application through a persistence interface; the motivation for this is that merely having persistent memory is not enough. It requires additional work to ensure that it is crash resistant. In other words, the system can restore the state of the contents in memory to some well-defined consistent state.

This is something file systems routinely handle – the issues of persistence and recoverability. I often try to think about failure: how does failure manifest? How do I know that I can recover the state to a consistent spot and then proceed?

This is an uncommon concept for most application developers: they don’t need to worry about the contents of memory being “consistent” in the face of crashes because when the application crashes, the memory is lost.

Mnemosyne provides a model of consistency for applications by creating an explicit mechanism for providing crash consistency. Note that Mnemosyne won’t define those consistent states – the application must define what it means for its data structures to be consistent. What Mnemosyne offers are certain guarantees about the contents of memory.

The authors’ decision to virtualize their SCM is an interesting one: “[V]irtualization prevents a memory leak in one program from monopolizing a finite amount of SCM.” Thus, they stage SCM content to disk between processes. Consistency of data is provided by “ordering writes”. The authors identify four consistency mechanisms:

- Atomic variable update – update the data in place as a single all-or-nothing operation.

- Append updates – data is not written in place, but rather a new copy is written, such as it might be to the end of a log (such updates are ordered).

- Shadow updates – data is written to a new location and once done, the pointer to the old copy is updated to point to the new copy (e.g., via an atomic variable update). The authors point out there is a potential leak here that must be handled properly.

- In-place updates – used for data structures that can be modified in place; provided the operations are ordered.

Consistency guarantees for persistent memory are accomplished using processor semantics and mechanisms:

- A write through operation (e.g., a non-temporal move) that is written directly to memory.

- Memory fences that ensure strict ordering of operations before the fence relative to operations after the fence.

- Cache line flushes. The CPU stores memory inside the processor while it is acting upon it. In fact, a modern CPU has multiple levels of memory. The most expensive (and smallest) will be the Level 1 cache. It’s also the fastest. L2 cache is larger and slower than L1 cache. L3 cache is typically shared with all CPUs on the processor; it is the largest and slowest of the caches.
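
To see how a shadow update depends on ordered flushes, here is a toy model rather than anything from Mnemosyne itself. `SimulatedNVM` is a hypothetical stand-in that separates what sits in the CPU cache from what has reached persistent memory; `flush` plays the role of a cache line flush followed by a fence, and `crash` discards everything that was not flushed.

```python
class SimulatedNVM:
    """Toy persistent memory: stores land in a volatile cache until flushed."""

    def __init__(self):
        self.durable = {}   # contents that survive a crash
        self.cache = {}     # dirty data still in the (volatile) CPU cache

    def store(self, addr, value):
        self.cache[addr] = value

    def flush(self, addr):
        # Stand-in for a cache line flush plus a fence: make one address durable.
        if addr in self.cache:
            self.durable[addr] = self.cache.pop(addr)

    def load(self, addr):
        return self.cache.get(addr, self.durable.get(addr))

    def crash(self):
        self.cache.clear()  # everything that was not flushed is lost


def shadow_update(nvm, new_addr, new_value, crash_before_publish=False):
    """Write the new copy, make it durable, and only then swing the pointer."""
    nvm.store(new_addr, new_value)
    nvm.flush(new_addr)              # the new copy must be durable first
    if crash_before_publish:
        nvm.crash()                  # simulated failure between the two steps
        return
    nvm.store("root", new_addr)      # single pointer update publishes the copy
    nvm.flush("root")


def read_root(nvm):
    return nvm.load(nvm.load("root"))
```

If the crash happens before the pointer is flushed, a reader still finds the old value through `root`; the new copy is left orphaned in persistent memory, which is the potential leak the authors say must be handled.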

For storage people, some of this is familiar and some of it is different – instead of worrying about storage stack semantics we’re now worrying about processor cache semantics. One upside is that processor semantics are more rigidly enforced than storage semantics (e.g., disk drives that lie and say that the data has been written when it hasn’t.) One downside is that it’s a new failure domain. For anyone used to working with persistent storage, understanding the failure domain is vital. I suspect it is also different for people used to thinking about the processor perspective, since persistence isn’t usually something you have to reason about.

Mnemosyne implemented a persistent heap allocator, a modified version of Intel’s STM Compiler (we’ll see later that others had to move that work to other compilers because it is now abandoned), a logging mechanism, a persistent region mechanism, and a transactional system (based upon TinySTM).

Their results are, of course, good. After all, if they had not been good, they wouldn’t have been published. They outperform BerkeleyDB (for some metrics). They demonstrated a fast and persistent red-black tree implementation. They show the benefits of asynchronous truncation.

Mnemosyne was a useful contribution because it was an early exploration into considering how we should use byte-addressable non-volatile memory. The library they built is used in future work as well, and this is a heavily cited paper.
